Fields of The World: A Machine Learning Benchmark Dataset For Global Agricultural Field Boundary Segmentation

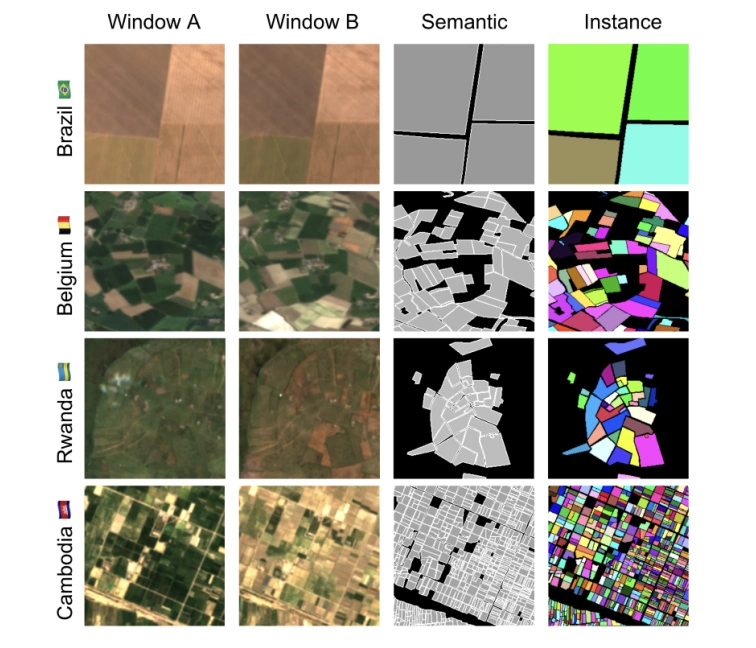

Training samples from four continents, demonstrating the diversity within Fields of The World.

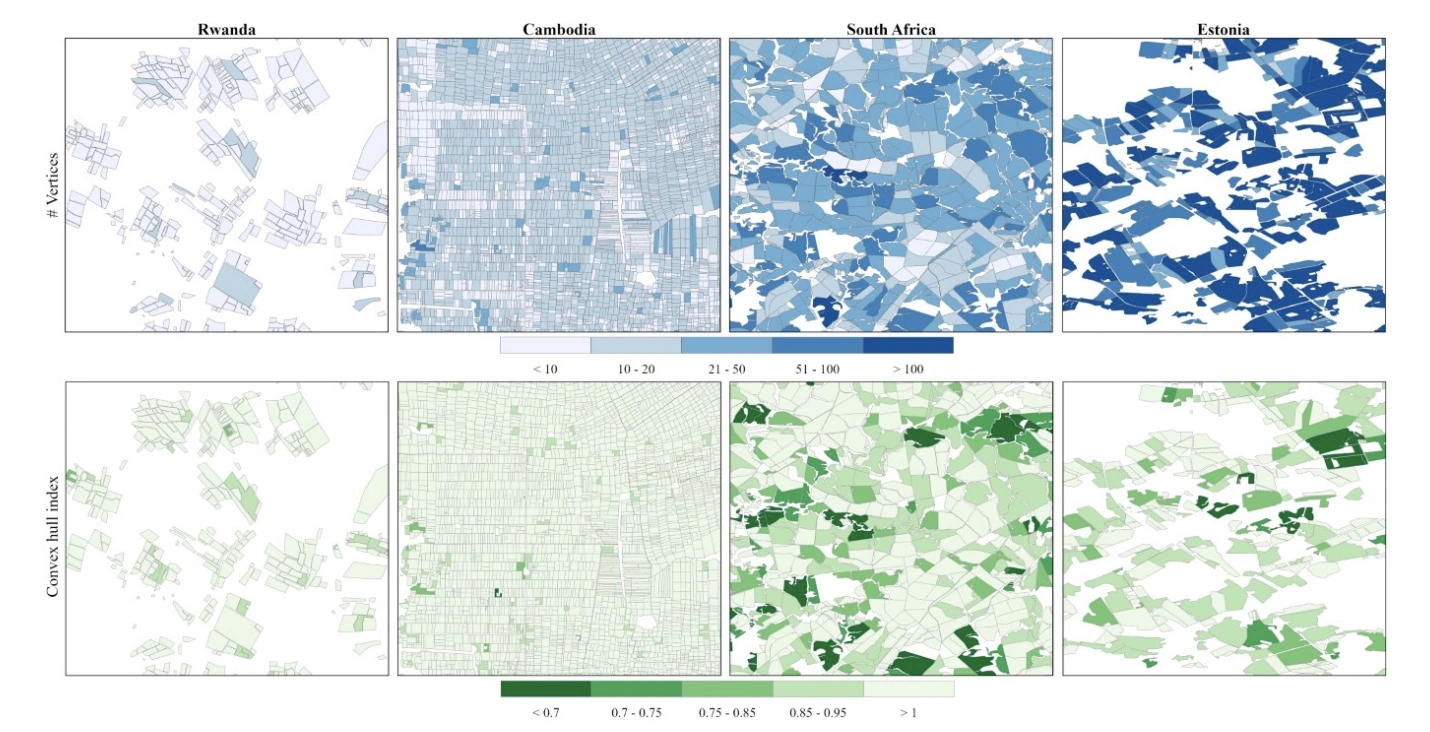

Visualization of the number of field polygon vertices (above) and the convex hull index (below).

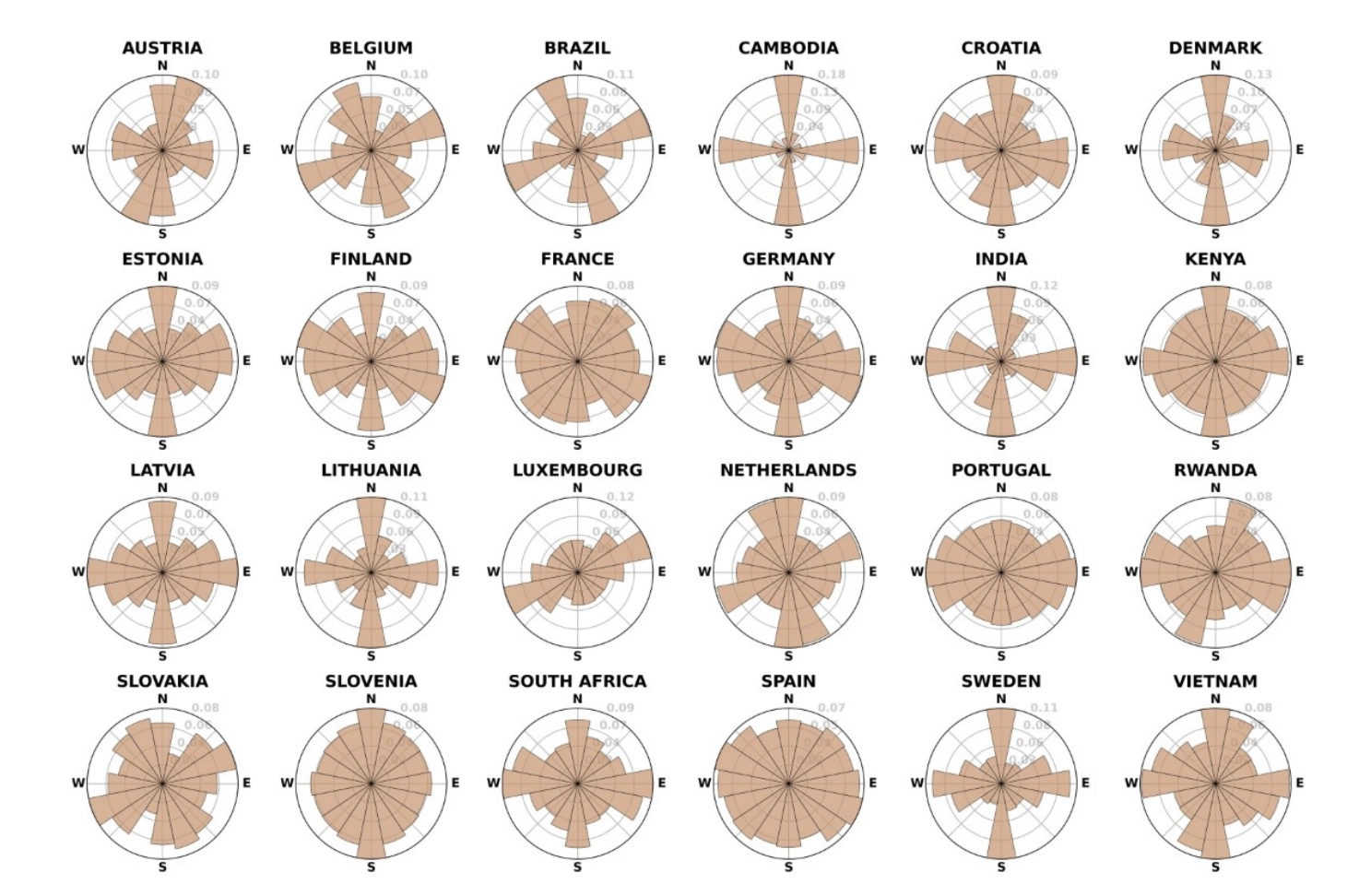

Field orientation histograms of all countries in the Fields of The World (FTW) dataset.

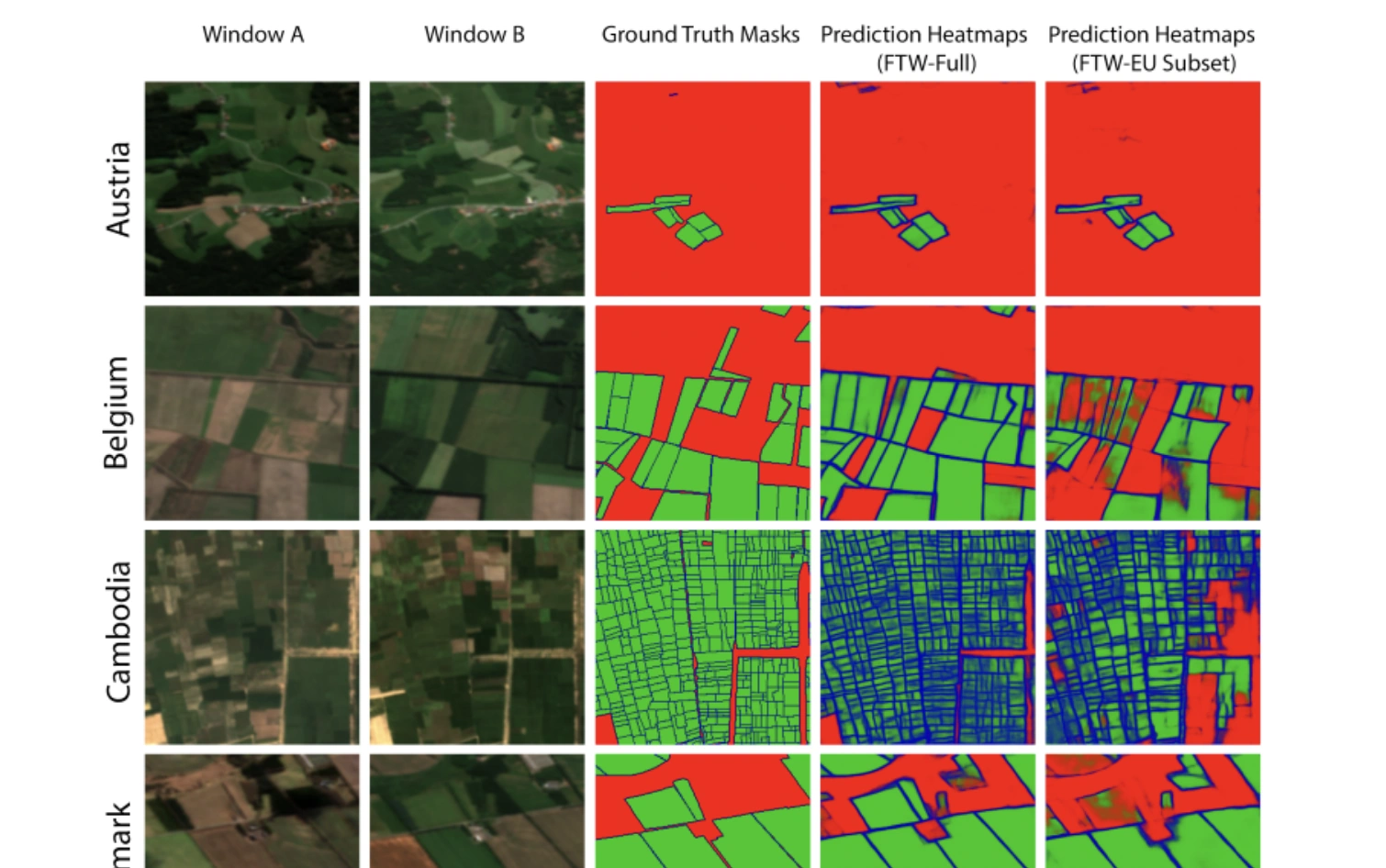

Prediction samples from Presence-Only countries (R: Background, G: Fields, B: Boundaries).

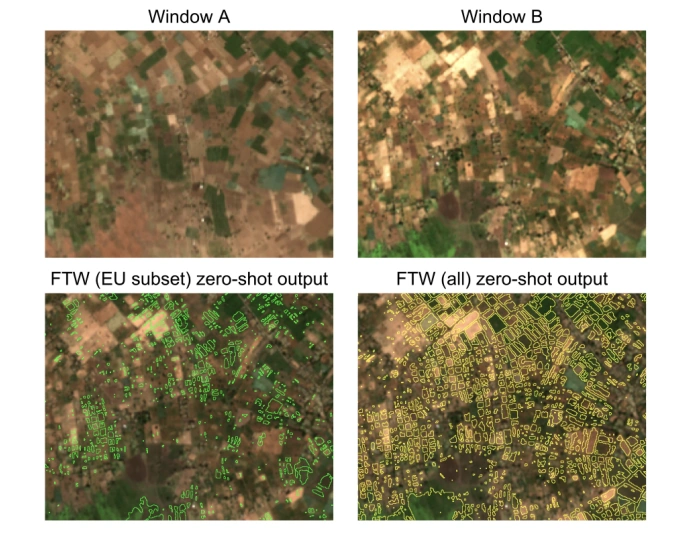

Zero-shot predictions with no post-processing for a 20 sq km region in Ethiopia.

Abstract

Crop field boundaries are foundational datasets for agricultural monitoring and assessments but are expensive to collect manually. Machine learning (ML) methods for automatically extracting field boundaries from remotely sensed images could help meet the demand for these datasets at a global scale. However, current ML methods for field instance segmentation lack sufficient geographic coverage, accuracy, and generalization capabilities. Further, research on improving ML methods is restricted by the lack of labeled datasets representing the diversity of global agricultural fields. We present Fields of The World (FTW) -- a novel ML benchmark dataset for agricultural field instance segmentation spanning 24 countries on four continents (Europe, Africa, Asia, and South America). FTW is an order of magnitude larger than previous datasets with 70,462 samples, each containing instance and semantic segmentation masks paired with multi-date, multi-spectral Sentinel-2 satellite images. We provide results from baseline models for the new FTW benchmark, show that models trained on FTW have better zero-shot and fine-tuning performance in held-out countries than models that aren't pre-trained with diverse datasets, and show positive qualitative zero-shot results of FTW models in a real-world scenario -- running on Sentinel-2 scenes over Ethiopia.
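Each FTW sample pairs an instance mask with a semantic mask, and the prediction figure above uses three classes (background, fields, boundaries). The sketch below illustrates one plausible way such a three-class mask could be derived from an instance mask. It is not taken from the FTW code: the function name `instance_to_semantic`, the class ids, and the 4-neighbour boundary rule are all assumptions made for illustration, and plain Python lists stand in for the array library a real pipeline would likely use.

```python
# Hypothetical class ids; the actual FTW encoding may differ.
BACKGROUND, FIELD, BOUNDARY = 0, 1, 2


def instance_to_semantic(instance_mask):
    """Turn an instance mask into a three-class semantic mask.

    instance_mask is a list of rows, where 0 means "no field" and any
    positive integer is a field id. A field pixel becomes BOUNDARY when
    at least one in-image 4-connected neighbour has a different id.
    Neighbours outside the image are ignored, so a field cut by the
    tile edge does not get an artificial boundary there (an assumption).
    """
    height = len(instance_mask)
    width = len(instance_mask[0]) if height else 0
    semantic = [[BACKGROUND] * width for _ in range(height)]

    for r in range(height):
        for c in range(width):
            field_id = instance_mask[r][c]
            if field_id == 0:
                continue  # stays BACKGROUND
            semantic[r][c] = FIELD
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                inside = 0 <= nr < height and 0 <= nc < width
                if inside and instance_mask[nr][nc] != field_id:
                    semantic[r][c] = BOUNDARY
                    break
    return semantic
```

Under this rule, a single 3×3 field surrounded by background yields a one-pixel ring of boundary pixels around one interior field pixel, and two adjacent fields are separated by boundary pixels on both sides of their shared edge.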

BibTeX

@article{kerner2025fields,
  title={Fields of The World: A Machine Learning Benchmark Dataset for Global Agricultural Field Boundary Segmentation},
  volume={39},
  url={https://ojs.aaai.org/index.php/AAAI/article/view/35034},
  DOI={10.1609/aaai.v39i27.35034},
  number={27},
  journal={Proceedings of the AAAI Conference on Artificial Intelligence},
  author={Kerner, Hannah and Chaudhari, Snehal and Ghosh, Aninda and Robinson, Caleb and Ahmad, Adeel and Choi, Eddie and Jacobs, Nathan and Holmes, Chris and Mohr, Matthias and Dodhia, Rahul and Lavista Ferres, Juan M and Marcus, Jennifer},
  year={2025},
  month={Apr.},
  pages={28151-28159}
}